A consistent focus of our blog is the technical and theoretical issues that emerge in the world of digital preservation. For example, we have explored the challenges archivists face when they have to appraise collections in order to select which materials are kept and which are thrown away. Such complex questions take on specific dimensions within the world of digital preservation.

If you work in digital preservation then the term ‘significant properties’ will no doubt be familiar to you. The concept has been viewed as a hindrance, because it is shrouded in foggy terminology, and as a distinct impossibility, because the diversity of digital objects in the world means that they, like their analogue counterparts, cannot be universally generalised or reduced to a series of measurable characteristics.

In a technical sense, establishing a set of core characteristics for file formats has been important for initiatives like Archivematica, ‘a free and open-source digital preservation system that is designed to maintain standards-based, long-term access to collections of digital objects.’ Archivematica implements ‘default format policies based on an analysis of the significant characteristics of file formats.’ These systems manage digital information using an ‘agile software development methodology’ which ‘is focused on rapid, iterative release cycles, each of which improves upon the system’s architecture, requirements, tools, documentation, and development resources.’

Such a philosophy may elicit groans of frustration from information managers who may well want to leave their digital collections alone and practise a culture of non-intervention. Yet this adaptive style of project management, which is designed to respond rapidly to change, is often contrasted with predictive development that focuses on risk assessment and the planning of long-term projects. The argument against predictive methodologies is that, as management models, they can be unwieldy and unresponsive to change. This can have damaging financial consequences, particularly when investing in expensive, risky and large-scale digital preservation projects, as the BBC’s failed DMI initiative demonstrates.

Indeed, agile software development methodology may well be an important key to the sustainability of digital preservation systems, which need to find practical ways of navigating technological innovations and the culture of perpetual upgrade. Agility in this context is synonymous with resilience, and the practical application of significant properties as a means to align file format interoperability offers a welcome anchor for a technological environment structured by persistent change.

Significant properties vs the authentic digital object

What significant properties imply, as archival concept and practice, is that desiring authenticity for the digitised and born-digital objects we create is likely to end in frustration. Simply put, preserving all the information that makes up a digital object is a hugely complex affair, and is a procedure that will require numerous and context-specific technical infrastructures.

As Trevor Owens explains: ‘you can’t just “preserve it” because the essence of what matters about “it” is something that is contextually dependent on the way of being and seeing in the world that you have decided to privilege.’ Owens uses the example of the GeoCities web archiving project to demonstrate that if you don’t have the correct, let’s say ‘authentic’, tools to interpret a digital object (in this case, a website that is only discernible on certain browsers), you simply cannot see the information accurately. Part of the signal is always missing, even if something ‘significant’ remains (the text or parts of the graphics).

It may be desirable ‘to preserve all aspects of the platform in order to get at the historicity of the media practice’, as Jonathan Sterne, author of MP3: The Meaning of a Format, suggests, but in a world that constantly displaces old technological knowledge with new, settling for the preservation of significant properties may be a pragmatic rather than ideal solution.
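To make the idea a little more concrete, here is a minimal sketch of what checking significant properties across a format migration could look like. It is an illustration only: the `AudioProperties` class, the `changed_properties` function and the example values are all hypothetical, and it does not reflect how Archivematica or any other preservation system actually implements its format policies.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AudioProperties:
    """Hypothetical technical characteristics recorded for an audio object."""
    duration_seconds: float
    sample_rate_hz: int
    bit_depth: int
    channels: int


def changed_properties(original, migrated, significant, duration_tolerance=0.01):
    """Return the names of the significant properties that differ after migration.

    Only the properties listed in `significant` are checked; anything else
    is allowed to change without being reported.
    """
    before, after = asdict(original), asdict(migrated)
    changed = []
    for name in significant:
        if name == "duration_seconds":
            # Durations are floats, so compare within a small tolerance.
            if abs(before[name] - after[name]) > duration_tolerance:
                changed.append(name)
        elif before[name] != after[name]:
            changed.append(name)
    return changed


# Made-up values: the migrated copy has been downsampled from 96 kHz to 48 kHz.
original = AudioProperties(duration_seconds=183.42, sample_rate_hz=96000, bit_depth=24, channels=2)
migrated = AudioProperties(duration_seconds=183.42, sample_rate_hz=48000, bit_depth=24, channels=2)

# If only duration and channel count are deemed significant, the migration passes.
print(changed_properties(original, migrated, ["duration_seconds", "channels"]))

# If sample rate is also deemed significant, the same migration is flagged.
print(changed_properties(original, migrated, ["duration_seconds", "sample_rate_hz", "channels"]))
```

The point of the sketch is that the same migrated file can pass or fail depending on which properties have been chosen as significant, which is exactly the contextual decision Owens describes.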

Analogue to digital issues

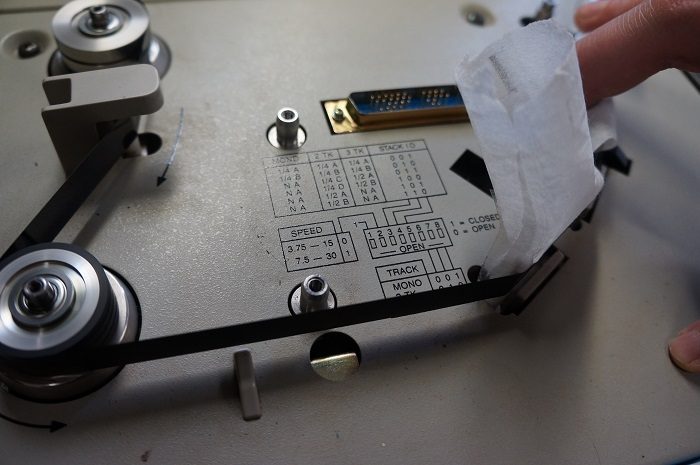

To bring these issues back to the tape we work with at Great Bear, there are of course times when it is important to use the appropriate hardware to play the tapes back, and there is a certain amount of historically specific technical knowledge required to make the machines work in the first place. We often wonder what will happen to the specialised knowledge learnt by media engineers in the 70s, 80s and 90s, who operated tape machines that are now obsolete. There is the risk that when those people die, the knowledge will die with them. Of course it is possible to get hold of operating manuals, but this is by no means a guarantee that the mechanical techniques will be understood within a historical context that is increasingly tape-less and software-based. By keeping our wide selection of audio and video tape machines purring, we are sustaining a machinic-industrial folk knowledge which ultimately helps to keep our customers’ magnetic tape-based media memories alive.

Of course a certain degree of historical accuracy is required in the transfers because, very obviously, you can’t play a V2000 tape on a VHS machine, no matter how hard you try!

Yet the need to play back tapes on exactly the same machine becomes less important in instances where the original tape was recorded on a domestic reel-to-reel recorder, such as the Grundig TK series, which may not have been of the greatest quality in the first place. To get the best digital transfer it is desirable to play back tapes on a machine with higher specifications that can read the magnetic information on the tape as fully as possible. This is because you don’t want to introduce any more errors in the transfer process by playing the tape back on a lower quality machine, as those errors would then become part of the digitised signal.

It is actually very difficult to remove things like wow and flutter after a tape has been digitised, so it is far better to ensure machines are calibrated appropriately before the tape is migrated, even if the tape was not originally recorded on a machine with professional specifications. What is ultimately at stake in transferring analogue tape to digital formats is the quality of the signal. Absolute authenticity is incidental here, particularly if things sound bad.

The moral of this story, if there can be one, is that with any act of transmission, the recorded signal is liable to change. The changes can be slight alterations, huge drop-outs or anything in between. The agile software developers know that, given the technological conditions in which current knowledge is produced and preserved, transformation is inevitable and must be responded to. Perhaps it is realistic to assume this is the norm in society today, and that creating digital preservation systems that are adaptive is key to the survival of information, as is accepting that preserving the ‘full picture’ cannot always be guaranteed.
