What is the most effective way to store and manage digital data in the long term? This is a question we have given considerable attention to on this blog. We have covered issues such as analogue obsolescence, digital sustainability and digital preservation policies. Yet it still seems to be an open question, and one up for serious debate.

We were inspired to write about this issue once again after reading an article published in New Scientist a year ago called ‘Cassette tapes are the future of big data storage.’ The title is a little misleading, because the tape it refers to is not the domestic audio cassette that has recently acquired much counter-cultural kudos, but archival tape cartridges that can store up to 100 TB of data. How much?! I hear you cry! And why tape, given the ubiquity of digital technology these days? Aren’t we all supposed to be ‘going tapeless’?

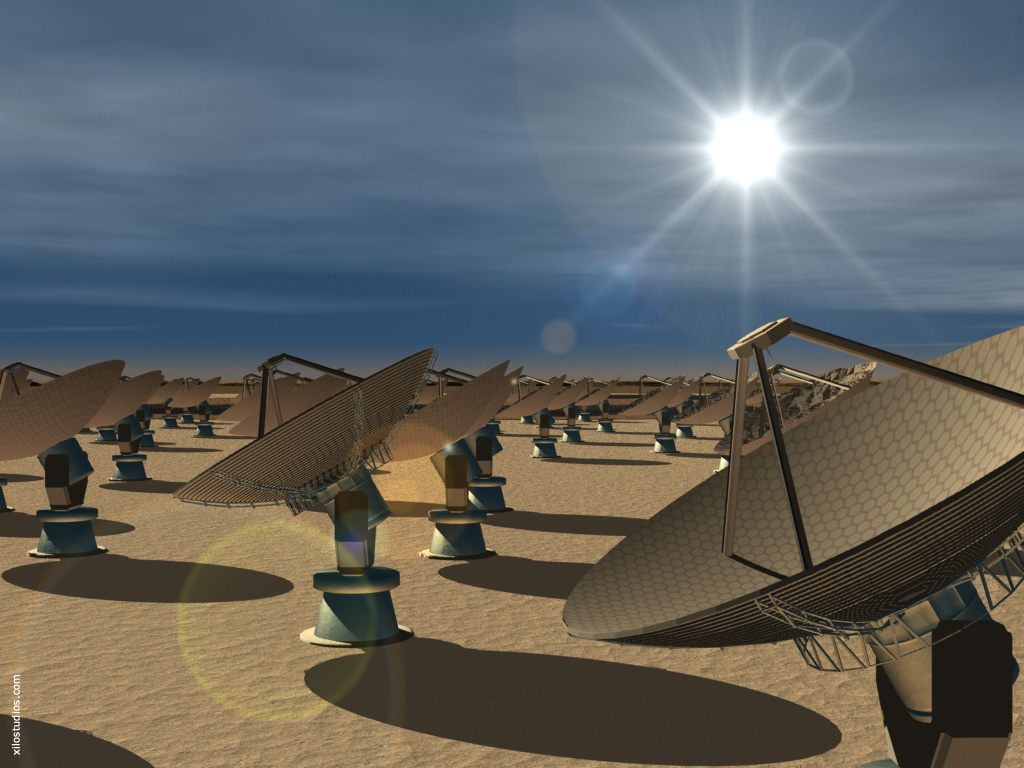

The reason for such an invention, the New Scientist reveals, is the ‘Square Kilometre Array (SKA), the world’s largest radio telescope, whose thousands of antennas will be strewn across the southern hemisphere. Once it’s up and running in 2024, the SKA is expected to pump out 1 petabyte (1 million gigabytes) of compressed data per day.’
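To put the SKA figure in perspective, here is a rough sketch of how many cartridges 1 PB a day would fill. It assumes decimal units (1 PB = 1,000 TB, as in the quote), that every cartridge is filled to its nominal capacity and that only one copy is kept. Real archives usually keep more than one copy, so treat these numbers as lower bounds.

```python
import math

DAILY_OUTPUT_TB = 1_000  # 1 PB per day, in decimal units (1 PB = 1,000 TB)

def cartridges_needed(data_tb: float, cartridge_tb: float) -> int:
    """Whole cartridges needed to hold data_tb terabytes (assumes each is filled completely)."""
    return math.ceil(data_tb / cartridge_tb)

for capacity in (35, 100):
    per_day = cartridges_needed(DAILY_OUTPUT_TB, capacity)
    per_year = cartridges_needed(DAILY_OUTPUT_TB * 365, capacity)
    print(f"{capacity} TB cartridges: {per_day} per day, {per_year} per year")
```

Even at 100 TB per cartridge, that works out at 10 cartridges a day, or 3,650 a year, for a single copy.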

Image of the SKA dishes

Researchers at Fuji and IBM have already designed a tape that can store up to 35 TB, and it is hoped that a 100 TB tape will be developed to cope with the astronomical ‘annual archive growth [that] would swamp an experiment that is expected to last decades’. The 100 TB cartridges will be made ‘by shrinking the width of the recording tracks and using more accurate systems for positioning the read-write heads used to access them.’
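A back-of-envelope model shows why narrower tracks matter. If tape length, tape width and the density of bits along each track all stay the same, capacity scales with the number of tracks, and so inversely with track width. That simplifying assumption is ours, not the article's, and real designs may change several of these variables at once. The sketch works out how much narrower the tracks would need to be to get from 35 TB to 100 TB.

```python
def scaled_capacity(base_tb: float, width_factor: float) -> float:
    """Capacity after scaling track width by width_factor (0.5 = tracks half as wide).

    Assumes tape length, tape width and bits per unit length of track are
    unchanged, so capacity is proportional to the number of tracks.
    """
    return base_tb / width_factor

def required_width_factor(base_tb: float, target_tb: float) -> float:
    """Track-width factor needed to go from base_tb to target_tb under the same assumption."""
    return base_tb / target_tb

print(scaled_capacity(35, 0.5))                  # 70.0
print(round(required_width_factor(35, 100), 2))  # 0.35
```

On this model the tracks would need to be about 35% of their current width, which means roughly 2.9 times as many tracks across the tape. That is why head positioning has to become so much more accurate.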

If successful, this would be a significant achievement in materials science and electronics. Narrower tracks leave less room for error in reading and writing: positioning the read-write heads will have to be incredibly precise on a tape that stores such an extreme amount of information. Presumably narrower tracks will also leave no space for guard bands. Guard bands are unrecorded strips between the tracks of recorded information, designed to prevent interference between neighbouring tracks, known as ‘cross-talk’. They were used on larger video tape formats such as U-Matic and VHS, but were dispensed with on smaller formats such as Hi-8. Hi-8 packed magnetic information more densely into a small space and used video heads with tilted gaps (azimuth recording) instead of guard bands.
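The space cost of guard bands can be sketched the same way. The function below counts how many tracks fit across a strip of tape when each track is followed by a blank guard band. The widths are hypothetical round numbers chosen for illustration, not the dimensions of any real format.

```python
def tracks_that_fit(usable_width_um: float, track_um: float, guard_um: float = 0.0) -> int:
    """Tracks that fit side by side when each track is followed by a blank guard band."""
    return int(usable_width_um // (track_um + guard_um))

def recorded_fraction(track_um: float, guard_um: float) -> float:
    """Share of the width that actually carries recorded signal."""
    return track_um / (track_um + guard_um)

# Hypothetical widths in micrometres, for illustration only.
WIDTH_UM, TRACK_UM, GUARD_UM = 1000, 10, 2

print(tracks_that_fit(WIDTH_UM, TRACK_UM, GUARD_UM))   # 83
print(tracks_that_fit(WIDTH_UM, TRACK_UM))             # 100
print(f"{recorded_fraction(TRACK_UM, GUARD_UM):.0%}")  # 83%
```

With these made-up figures, dropping a 2 µm guard band lets 100 tracks fit where 83 did before. Azimuth recording makes this possible because a head whose gap is tilted one way picks up relatively little signal from a neighbouring track written at the opposite angle.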

The existence of the SKA still doesn’t answer the pressing question: why develop new archival tape storage solutions rather than hard drive storage?

Hard drives were embraced quickly because they take up less physical storage space than tape. Gone are the dusty rooms bursting with reel upon reel of bulky tape; hello stacks of infinite quick-fire data, whirring and purring all day and night. Yet when we consider the amount of energy hard drive storage requires to remain operable, the costs – both economic and ecological – dramatically increase.

A report by the Clipper Group, published in 2010, argues overwhelmingly for the benefits of tape over disk for the long-term archiving of data. It states that ‘disk is more than fifteen times more expensive than tape, based upon vendor-supplied list pricing, and uses 238 times more energy (costing more than the all costs for tape) for an archiving application of large binary files with a 45% annual growth rate, all over a 12-year period.’
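The 45% annual growth rate in the Clipper scenario is worth unpacking, because compounding does most of the work over 12 years. The sketch below only compounds that growth rate. It is not the Clipper Group's cost model, just the growth assumption behind it.

```python
def archive_size(initial: float, annual_growth: float, years: int) -> float:
    """Archive size after compounding annual_growth (0.45 = 45%) for the given number of years."""
    return initial * (1 + annual_growth) ** years

growth = archive_size(1.0, 0.45, 12)
print(f"After 12 years the archive is about {growth:.0f} times its starting size")
```

At 45% a year, an archive ends up roughly 86 times its starting size after 12 years, so any difference in cost or energy per terabyte between disk and tape is multiplied accordingly.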

This is probably quite staggering to read, given the amount of investment in establishing institutional architecture for tape-less digital preservation. Such an analysis of energy consumption does assume, however, that hard drives are turned on all the time, when surely many organisations transfer archives to hard drives and only check them once every 6-12 months.
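The gap between the two scenarios can be sketched with simple arithmetic. Every figure below is a hypothetical assumption for illustration: a drive drawing 6 W, checked twice a year and powered for 24 hours per check. The sketch also ignores the servers, controllers and cooling that surround the drives in practice.

```python
HOURS_PER_YEAR = 24 * 365

# Hypothetical figures for illustration only.
DRIVE_WATTS = 6.0      # assumed average draw of one spinning hard drive
CHECKS_PER_YEAR = 2    # one integrity check every six months
HOURS_PER_CHECK = 24   # assumed time a drive stays powered for each check

def annual_kwh(watts: float, hours_on: float) -> float:
    """Energy in kilowatt-hours for a device drawing `watts` for `hours_on` hours."""
    return watts * hours_on / 1000

always_on = annual_kwh(DRIVE_WATTS, HOURS_PER_YEAR)
periodic = annual_kwh(DRIVE_WATTS, CHECKS_PER_YEAR * HOURS_PER_CHECK)
print(f"Always on: {always_on:.1f} kWh/year per drive")
print(f"Powered only for checks: {periodic:.2f} kWh/year per drive")
print(f"Ratio: about {always_on / periodic:.0f}x")
```

Under these assumptions, the always-on figure dominates so heavily that the duty cycle matters more than the wattage of any particular drive.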

Yet the pressures of technological obsolescence and the need to keep checking that files remain usable, together with the expectation that digital archives be quickly accessible (unlike tape, which can only be read sequentially), make such energy consumption seem fairly inescapable for large institutions in an increasingly voracious, 24/7 information culture. Of course, obsolescence will undoubtedly affect high-capacity data tape cartridges as well. Technology does not stop innovating – it is not in the interests of the market for it to do so.
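Here is a rough illustration of why sequential access matters. Reaching a file on tape means winding to its position, so access time grows with distance along the tape. A hard drive's access time is roughly constant. The winding speed and disk access time below are hypothetical round numbers, and real tape drives are more complicated: modern cartridges lay tracks out in a back-and-forth serpentine pattern rather than one single pass.

```python
# Hypothetical figures for illustration only; real drives vary widely.
LOCATE_SPEED_M_PER_S = 10.0  # assumed speed at which a tape drive winds to a position
DISK_ACCESS_S = 0.01         # assumed ~10 ms average access time for a hard drive

def tape_access_s(current_m: float, target_m: float,
                  speed_m_per_s: float = LOCATE_SPEED_M_PER_S) -> float:
    """Time to wind from the current position to the target position on a linear tape."""
    return abs(target_m - current_m) / speed_m_per_s

for target in (10, 250, 900):
    print(f"file at {target} m: tape ~{tape_access_s(0, target):.0f} s, "
          f"disk ~{DISK_ACCESS_S * 1000:.0f} ms")
```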

Perhaps more significantly, the archive world has not yet developed standards that address the needs of digital information managers. Henry Newman’s presentation at the Designing Storage Architectures 2013 conference explored why digital data management is so difficult, pointing precisely to the lack of established standards:

- ‘There are some proprietary solutions available for archives that address end to end integrity;

- There are some open standards, but none that address end to end integrity;

- So, there are no open solutions that meet the needs of [the] archival community.’
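Newman's point about end-to-end integrity can be illustrated at the level of a single file copy. The sketch below uses only Python's standard library. It records a SHA-256 checksum before a file is copied, then recomputes the checksum from the copy and raises an error if the two differ. The function names are our own. This covers only one hop, and rereading a freshly written file may be served from the operating system's cache rather than the disk itself. End-to-end integrity in Newman's sense spans every layer from the application down to the storage media, which is what he says no open standard yet addresses.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def copy_with_verification(src: Path, dst: Path) -> str:
    """Copy src to dst and confirm the copy matches; returns the verified checksum."""
    expected = sha256_of(src)  # fixity value recorded before the data moves
    shutil.copyfile(src, dst)
    actual = sha256_of(dst)    # recomputed from the bytes read back from the copy
    if actual != expected:
        raise OSError(f"checksum mismatch for {dst}: {actual} != {expected}")
    return expected

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        original = Path(tmp) / "original.bin"
        original.write_bytes(b"archival payload")
        print(copy_with_verification(original, Path(tmp) / "copy.bin"))
```

Storing the returned checksum alongside the file gives later integrity checks something to compare against.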

He goes on to write that standards are ‘technically challenging’ and require ‘years of domain knowledge and detailed understanding of the technology’ to implement. Worryingly, perhaps, he writes that ‘standards groups do not seem to be coordinating well from the lowest layers to the highest layers.’ We take this to mean that, without a coordinated conversation about digital standards, users and producers are not working in step. This makes digital information management a challenging area, and it will remain so until needs and interests are seen as shared.

Other presentations at the recent annual meeting of Designing Storage Architectures for Digital Collections, held on September 23–24, 2013 at the Library of Congress in Washington, DC, also suggest there are limits to innovation in hard drive storage. Gary Decad of IBM delivered a presentation on ‘The Impact of Areal Density and Millions of Square Inches of Produced Memory on Petabyte Shipments for TAPE, NAND Flash, and HDD Storage Class’.

For the lay (wo)man: areal density is the amount of data that can be packed into a given area of recording surface, whether a disk platter, a length of tape or a flash chip. It largely determines how much storage capacity manufacturers can produce, and how cheaply. We are used to living in a consumer society where new and improved gadgets appear all the time. Devices are getting smaller and we seem to be able to buy more storage space for less money. For example, it now costs under £100 to buy a 3 TB hard drive, and it is becoming increasingly difficult to buy a hard drive with less than 500 GB of storage space. By comparison, a year ago a 1 TB hard drive was top of the range and would probably have cost you about £100.
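The two hard drive prices are easier to compare as a cost per terabyte. Treating ‘under £100’ as £100, the comparison works out as follows:

```python
def pounds_per_tb(price_gbp: float, capacity_tb: float) -> float:
    """Price per terabyte in pounds sterling."""
    return price_gbp / capacity_tb

now = pounds_per_tb(100, 3)       # 3 TB drive for (just under) £100
year_ago = pounds_per_tb(100, 1)  # 1 TB drive for about £100
print(f"£{now:.2f}/TB now vs £{year_ago:.2f}/TB a year ago")
print(f"fall in price per TB: {1 - now / year_ago:.0%}")
```

That is roughly £33 per TB against £100 per TB, a fall of about two thirds in a single year. Decad's presentation suggests this pace cannot continue indefinitely.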

Does my data look big in this?

Yet the presentation from Gary Decad suggests we are reaching a plateau with this kind of storage technology – infinite memory growth and reduced costs will soon no longer be feasible. The presentation states that ‘with decreasing rates of areal density increases for storage components and with component manufactures reluctance to invest in new capacity, historical decreases in the cost of storage ($/GB) will not be sustained.’
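Decad's argument turns on the rate at which costs fall, not just on whether they fall. The sketch below compares a cost per GB that falls by a steady percentage each year with one where the annual percentage decline itself halves each year. The 30% starting decline and the halving are hypothetical parameters that illustrate the shape of the argument. They are not figures from the presentation.

```python
def project_cost(start: float, initial_decline: float,
                 slowdown: float, years: int) -> list[float]:
    """Cost per GB for each year from 0 to `years`.

    Each year the cost falls by the current decline rate; the decline rate
    is then multiplied by `slowdown` (1.0 = steady decline, <1.0 = slowing).
    """
    costs = [start]
    decline = initial_decline
    for _ in range(years):
        costs.append(costs[-1] * (1 - decline))
        decline *= slowdown
    return costs

# Hypothetical parameters, chosen only to illustrate the argument.
steady = project_cost(1.0, 0.30, 1.0, 5)
slowing = project_cost(1.0, 0.30, 0.5, 5)
print(f"after 5 years, steady decline: {steady[-1]:.0%} of starting $/GB")
print(f"after 5 years, slowing decline: {slowing[-1]:.0%} of starting $/GB")
```

After five years, the steady case has fallen to about 17% of its starting cost. The slowing case is still at about 52%.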

Where does that leave us now? The resilience of tape as an archival solution, the energy implications of hard drive storage, the lack of established archival standards and a foreseeable end to cheap and easy big data storage all point to the complex and confusing terrain of information management in the 21st century. Perhaps the Clipper report offers the most grounded appraisal: ‘the best solution is really a blend of disk and tape, but – for most uses – we believe that the vast majority of archived data should reside on tape.’ Yet until standards are established that meet the needs of digital information managers, it seems this area will continue to generate troubling, if intriguing, conundrums.
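The Clipper recommendation can be made more concrete with the report's headline ratio. As a simplification, this model assumes that the quoted figure (disk more than fifteen times more expensive than tape) applies proportionally to whatever share of the archive sits on disk. It then estimates the cost of a blend relative to an all-tape archive. Because that ratio comes from one specific 12-year scenario, the results are indicative only.

```python
DISK_TO_TAPE_COST_RATIO = 15  # Clipper Group headline figure (list pricing, 12-year scenario)

def blended_relative_cost(disk_fraction: float) -> float:
    """Cost of an archive split between disk and tape, relative to keeping it all on tape."""
    if not 0.0 <= disk_fraction <= 1.0:
        raise ValueError("disk_fraction must be between 0 and 1")
    return disk_fraction * DISK_TO_TAPE_COST_RATIO + (1 - disk_fraction) * 1.0

for share in (0.0, 0.1, 0.5, 1.0):
    print(f"{share:.0%} on disk -> {blended_relative_cost(share):.1f}x the all-tape cost")
```

On this rough model, keeping 10% of the archive on disk for quick access costs about 2.4 times as much as keeping it all on tape. A 50/50 split costs about 8 times as much.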

Post published Nov 18, 2013