In a blog post a few weeks ago we reflected on several practical and ethical questions emerging from our digitisation work. To explore these issues further we decided to take an in-depth look at the British Library’s Digital Preservation Strategy 2013-2016 that was launched in March 2013. The British Library is an interesting case study because they were an ‘early adopter’ of digital technology (2002), and are also committed to ensuring their digital archives are accessible in the long term.

Making sure the UK’s digital archives are available for subsequent generations seems like an obvious aim for an institution like the British Library. That’s what they should be doing, right? Yet it is clear from reading the strategy report that digital preservation is an unsettled and complex field, one that is certainly ‘not straightforward. It requires action and intervention throughout the lifecycle, far earlier and more frequently than does our physical collection (3).’

The British Library’s collection is huge and therefore requires coherent systems capable of managing its vast quantities of information.

‘In all, we estimate we already have over 280 terabytes of collection content – or over 11,500,000 million items – stored in our long term digital library system, with more awaiting ingest. The onset of non-print legal deposit legislation will significantly increase our annual digital acquisitions: 4.8 million websites, 120,000 e-journal articles and 12,000 e-books will be collected in the first year alone (FY 13/14). We expect that the total size of our collection will increase massively in future years to around 5 petabytes [that’s 5000 terabytes] by 2020.’

All that data needs to be backed up as well. In some cases valuable digital collections are backed up seven times, in different locations or on different servers (for a 5 petabyte collection that amounts to 35 petabytes, or 35,000 terabytes). So imagine it is 2020, and you walk into a large room crammed full of rack upon rack of hard drives bursting with digital information. The data files, which include everything from a BWAV audio file of a speech by Natalie Bennett, leader of the Green Party, after her election victory in 2015, to 3-D data files of cuneiform scripts from Mesopotamia, are constantly being monitored by algorithms designed to maintain the integrity of data objects. The algorithms measure bit rot and data decay and produce further volumes of metadata as each wave of file validation is initiated. The backup systems consume large amounts of energy and are costly, but in beholding them you stand in the same room as the memory of the world, automatically checked, corrected and repaired in monthly cycles.

Such a scenario is gestured toward in the British Library’s long term preservation strategy, but it is clear that it remains a work in progress, largely because the field of digital preservation is always changing. While the British Library has well-established procedures in place to manage their physical collections, they have not yet achieved this with their digital ones. Not surprisingly ‘technological obsolescence is often regarded as the greatest technical threat to preserving digital material: as technology changes, it becomes increasingly difficult to reliably access content created on and intended to be accessed on older computing platforms.’ An article from The Economist in 2012 reflected on this problem too: ‘The stakes are high. Mistakes 30 years ago mean that much of the early digital age is already a closed book (or no book at all) to historians.’

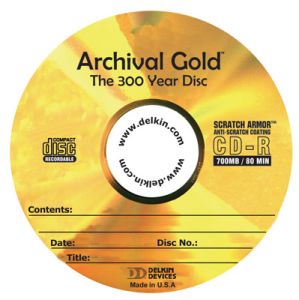

There are also shorter term digital preservation challenges, which encompass ‘everything from media integrity and bit rot to digital rights management and metadata.’ Bit rot is one of those terms capable of inducing widespread panic. It refers to how storage media, in particular optical media like CDs and DVDs, decay over time, often because they have not been stored correctly. When bit rot occurs, the small electric charge of a ‘bit’ in memory disperses, possibly altering program code or stored data, making the media difficult to read and, at worst, unreadable. Higher level software systems used by large institutional archives mitigate the risk of such underlying failures by implementing integrity checking and self-repairing algorithms (as imagined in the 2020 digital archive fantasy above). These technological processes cover ‘integrity and fixity checking, content stabilisation, format validation and file characterisation.’
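To make the idea of integrity checking and self-repair a little more concrete, here is a minimal Python sketch. It is not the British Library's system: the function names `sha256_of` and `check_and_repair` are hypothetical, and it assumes that a SHA-256 checksum was recorded for each file when it entered the archive and that one or more replica copies are stored elsewhere.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def check_and_repair(primary: Path, replicas: list[Path], expected: str) -> str:
    """Fixity-check `primary` against the checksum recorded at ingest.

    If the primary copy is missing or no longer matches, restore it from
    the first replica that still matches (hypothetical repair policy).
    Returns "ok", "repaired" or "unrecoverable".
    """
    if primary.exists() and sha256_of(primary) == expected:
        return "ok"
    for replica in replicas:
        if replica.exists() and sha256_of(replica) == expected:
            shutil.copyfile(replica, primary)  # overwrite the damaged copy
            return "repaired"
    return "unrecoverable"
```

An archive might run a check like this over every file on a schedule and log each result as preservation metadata. A single flipped bit changes the checksum, so silent damage is detected even when the file still opens.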

300 years, are you sure?

Preservation differences between analogue and digital media

The British Library isolate three main areas where digital technologies differ from their analogue counterparts. Firstly there is the issue of ‘proactive lifecycle management’. This refers to how preservation interventions for digital data need to happen earlier, and be reviewed more frequently, than for analogue data. Secondly there is the issue of file ‘integrity and validation.’ This refers to how it is far easier for a digital file to change without anyone noticing, while with a physical object it is usually clear if it has decayed or a bit has fallen off. This means there are greater risks to the authenticity and integrity of digital objects, and any changes need to be carefully managed and recorded properly in metadata.

Finally, and perhaps most worrying, is the ‘fragility of storage media’. Here the British Library explain:

‘The media upon which digital materials are stored is often unstable and its reliability diminishes over time. This can be exacerbated by unsuitable storage conditions and handling. The resulting bit rot can prevent files from rendering correctly if at all; this can happen with no notice and within just a few years, sometimes less, of the media being produced’.

A holistic approach to digital preservation involves taking and assessing significant risks, as well as adapting to vast technological change. ‘The strategies we implement must be regularly re-assessed: technologies and technical infrastructures will continue to evolve, so preservation solutions may themselves become obsolete if not regularly re-validated in each new technological environment.’

Establishing best practice for digital preservation remains a bit of an experiment, and different strategies such as migration, emulation and normalisation are being tested to find out which model best helps counter the real threats of inaccessibility and obsolescence we may face 5-10 years from now. What is encouraging about the British Library’s strategic vision is that they are committed to ensuring digital archives are accessible for years to come, despite the very clear challenges they face.